Description of the hands-on application

- You can download the source code needed to follow this Hands-On from GitHub: Hands-On Developer Guide.

The sample app has the folder structure shown in the figure Folder structure of your first Industrial Edge app.

All the required folders of your first app are found in the "solution/HandsOn_1" folder.

Description of Mosquitto MQTT broker

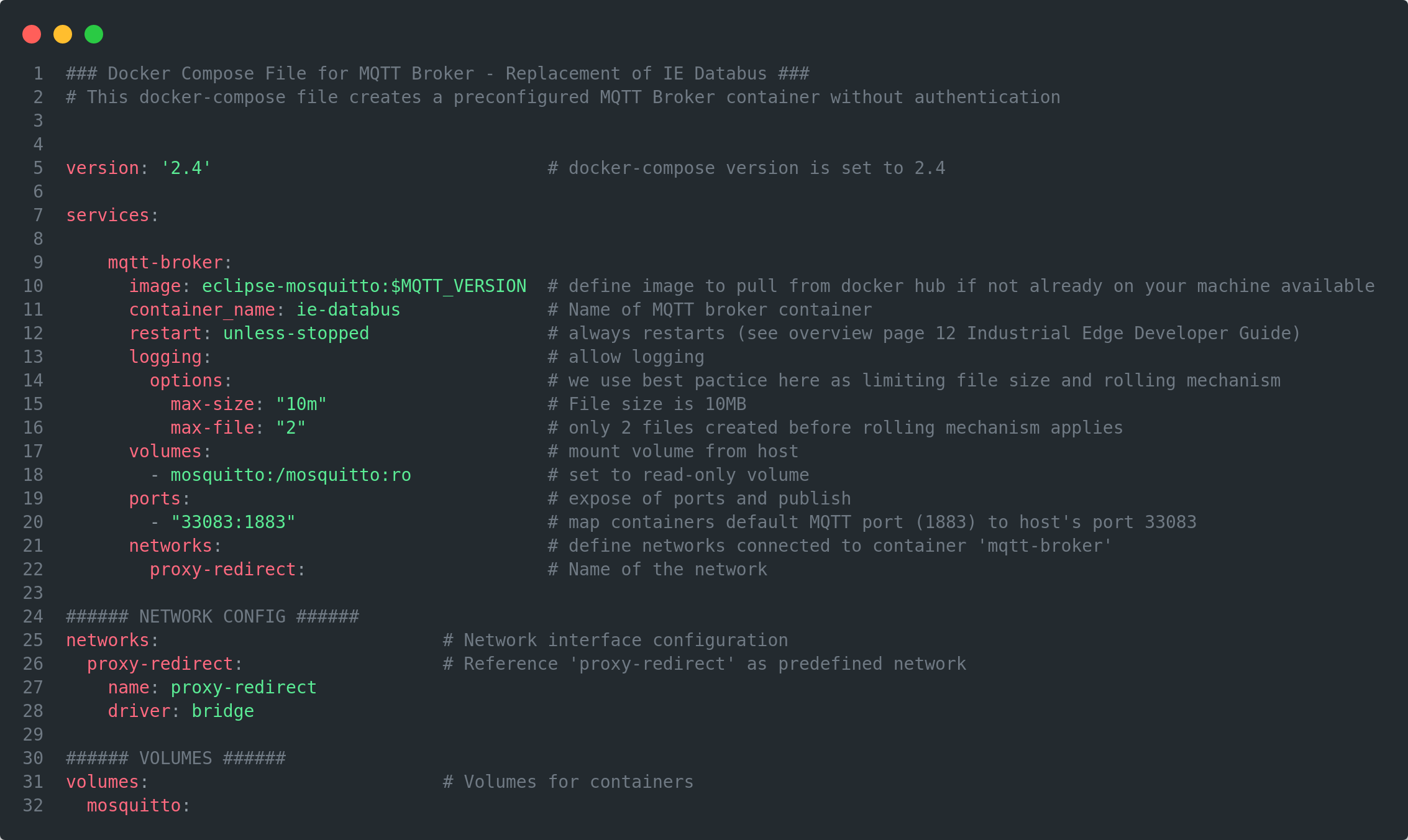

The Mosquitto MQTT broker container is the Databus replacement on the development machine. All necessary files for the MQTT broker container are placed in the 'mqtt_broker_mosquitto' folder.

It contains a docker-compose.yml and a hidden .env file.

It uses the prebuilt 'eclipse-mosquitto' image, which is initially pulled from Docker Hub in the corresponding version. The container is named 'ie-databus', the same name the Databus has on the IED. The network interface is 'proxy-redirect', which is declared as an external bridged Docker network because it already exists on the IED.

You need to create 'proxy-redirect' manually via the terminal, before starting any containers:

    # create proxy-redirect network
    docker network create proxy-redirect

Description of the Node-RED

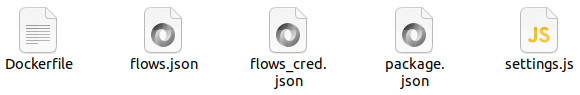

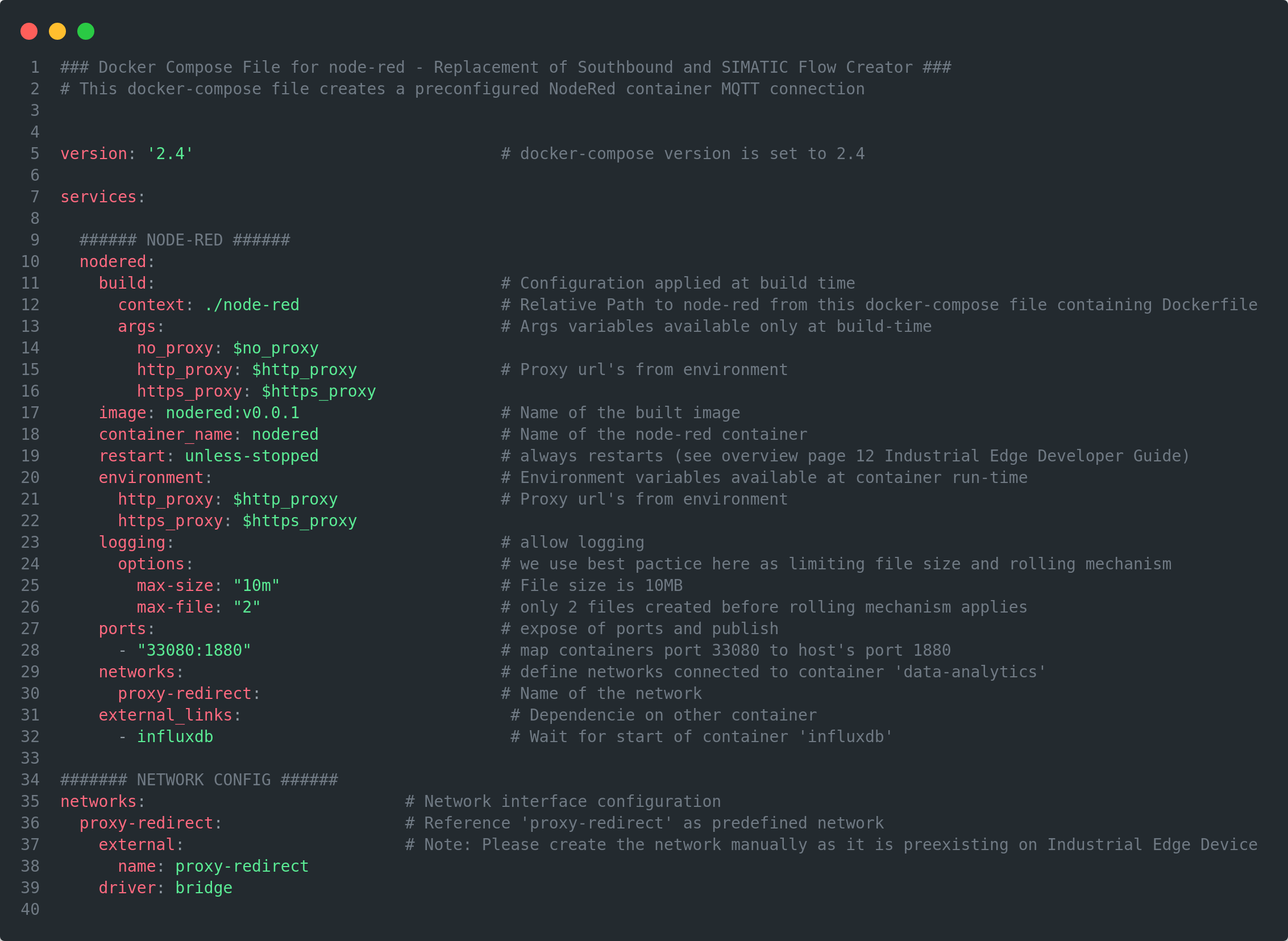

On the development machine, Node-RED replaces the SIMATIC S7 Connector and SIMATIC Flow Creator of the IED. All the required files for the Node-RED container are in the 'node_red' folder.

It contains a docker-compose.yml file, (hidden) .env file and another folder 'node-red'.

The Dockerfile in the figure Additional files for Node-RED container specifies how to build the image of the Node-RED container. It describes which version of Node-RED is used and which plugins are loaded, as well as how to perform configuration steps such as copying the example workflow.

The 'flows.json' file contains the app flow, which covers data acquisition, distribution of the data to 'data-analytics', and storage in the Influx database. The flow will be described in more detail in the next section.

The docker-compose.yml contains a build step to build the Node-RED image from the Dockerfile. The Node-RED ports must be exposed so that you can open the Node-RED web view and access the flow. The container uses the same network interface as the MQTT broker. The 'nodered' container waits for the 'influxdb' container to be started before starting itself.

HTTP and HTTPS proxy variables

Please be aware of the variables for the HTTP and HTTPS proxy. You must set them in the .env file. The default value is empty, which means no proxy is used. If you work behind a proxy, as is the case in most company networks, configure it here.

Description of your first 'Industrial Edge' app

This is the app that will be published to the IEM and deployed on IEDs. All the files that are required for your first IE app are found in the 'my_edge_app' folder.

The 'my_edge_app' folder contains the following files:

- docker-compose.yml: Creates the containers for the development environment and builds the image for Industrial Edge.

- docker-compose_Edge.yml: The adapted docker-compose.yml file, which is used later to upload your first app to the IEM with the IEAP.

- data-analytics folder: Contains the Python app that performs scientific calculations on the collected and preprocessed data. It also contains the Dockerfile to build the corresponding image.

The 'data-analytics' image is built from the Dockerfile in the 'data-analytics' folder. The base Python image is taken from an environment variable defined in the hidden .env file. All Python library dependencies listed in 'requirements.txt' are installed on top of this base image. The Dockerfile copies all Python files from the 'program' folder into the container and runs 'app.py' when the container starts.

'app.py' calls the main methods of 'data_analytics.py', which contains the scientific calculations and an MQTT client for receiving and sending data.

Introduction to Data Analytics app

This section gives a short introduction to the main parts of the 'data_analytics.py' code.

The file defines some important constants, which are used in the 'DataAnalyzer' class.

    BROKER_ADDRESS = 'ie-databus'
    BROKER_PORT = 1883
    MICRO_SERVICE_NAME = 'data-analytics'
    USERNAME = 'edge'
    PASSWORD = 'edge'

The first two constants define the address and port of the MQTT broker. USERNAME and PASSWORD are the credentials the client uses to authenticate against the broker. This becomes important later for the Databus on the IED.

Handle Data function

    def handle_data(self):
        """
        Starts the connection to MQTT broker and subscribes to topics.
        """
        self.logger.info('Preparing Mqtt Connection')
        try:
            # Set the credentials before connecting to the broker
            self.client.username_pw_set(USERNAME, PASSWORD)
            self.client.connect(BROKER_ADDRESS)
            self.client.loop_start()
            self.logger.info('Subscribe to topic StandardKpis')
            self.subscribe(topic='StandardKpis', callback=self.standard_kpis)
            self.logger.info('Subscribe to topic Mean')
            self.subscribe(topic='Mean', callback=self.power_mean)
            self.logger.info('Finished subscription to topics')
        except Exception as e:
            self.logger.error(str(e))

The 'handle_data' method is in charge of establishing the connection between the MQTT client and the broker. It first sets the client's credentials and then attempts to connect. Once the connection is established, the client subscribes to the two input topics, each with its corresponding callback method. Node-RED publishes the data it generates on these two topics.

Standard KPI function

The callback method 'standard_kpis' receives the power measurements of drives 1 and 2. It calculates the mean, median, and standard deviation of each power measurement array. The result is packed into a JSON structure and published by the MQTT client on the result topic 'StandardKpiResult' for Node-RED.

    # Callback function for MQTT topic 'StandardKpis'
    def standard_kpis(self, payload):
        values = [key['value'] for key in payload]
        # Calculate standard KPIs
        result = {
            'mean_result' : statistics.mean(values),
            'median_result' : statistics.median(values),
            'stddev_result' : statistics.stdev(values),
            'name' : payload[0]['name'],
        }
        self.logger.info('mean calculated: {}'.format(statistics.mean(values)))
        self.logger.info('median calculated: {}'.format(statistics.median(values)))
        self.logger.info('stddev calculated: {} \n ======='.format(statistics.stdev(values)))
        # publish results back on MQTT topic 'StandardKpiResult'
        self.client.publish(topic='StandardKpiResult', payload=json.dumps(result))
        return
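To make the calculation concrete, here is a minimal standalone sketch of the same KPI computation without logging or MQTT publishing. The function name `compute_standard_kpis` and the sample readings are made up for illustration; only the field names 'name' and 'value' are taken from the callback above:

```python
import json
import statistics


def compute_standard_kpis(payload):
    # Same calculation as the callback, without logging or publishing.
    values = [item['value'] for item in payload]
    return {
        'mean_result': statistics.mean(values),
        'median_result': statistics.median(values),
        'stddev_result': statistics.stdev(values),
        'name': payload[0]['name'],
    }


# Made-up sample message: three power readings of one drive.
sample_payload = [
    {'name': 'powerdrive1', 'value': 10.0},
    {'name': 'powerdrive1', 'value': 12.0},
    {'name': 'powerdrive1', 'value': 14.0},
]
kpi_result = compute_standard_kpis(sample_payload)

# This string is what would be published on the result topic.
kpi_message = json.dumps(kpi_result)
```

For these readings the mean and median are both 12.0 and the sample standard deviation is 2.0. Note that `statistics.stdev` raises a `StatisticsError` when it is given fewer than two values, so a batch with a single reading would need separate handling.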

Power Mean function

The callback method 'power_mean' is called when a message is received on the 'Mean' topic. Each message is a JSON object with two arrays containing the voltage and current values of drive 3. The callback multiplies each current value by the corresponding voltage value, sums these products, and divides the sum by the number of samples to obtain the mean power of drive 3. The result is packed into a JSON object and published by the MQTT client on the result topic 'MeanResult' back to Node-RED.

    # Callback function of MQTT topic 'Mean' subscription
    def power_mean(self, payload):
        self.logger.info('calculating sliding mean...')
        current_values = [item['value'] for item in payload['current_drive3_batch']]
        voltage_values = [item['value'] for item in payload['voltage_drive3_batch']]
        # Calculate mean of power
        power_batch_sum = sum(current * voltage for current, voltage in zip(current_values, voltage_values))
        power_mean = round(power_batch_sum / payload['sample_number'], 2)
        self.logger.info("sliding mean result: {}\n".format(power_mean))
        result = {
            'power_mean_result' : power_mean,
            'name' : 'powerdrive3_mean',
        }
        # publish result back on MQTT topic 'MeanResult'
        self.client.publish(topic='MeanResult', payload=json.dumps(result))
        return
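As a worked example, the following standalone sketch repeats the power calculation on a made-up batch. The function name `compute_power_mean` and the sample numbers are hypothetical; the payload keys are the ones the callback reads:

```python
def compute_power_mean(payload):
    # Same arithmetic as the callback, without logging or publishing.
    current_values = [item['value'] for item in payload['current_drive3_batch']]
    voltage_values = [item['value'] for item in payload['voltage_drive3_batch']]
    # Power per sample is current * voltage; sum over the whole batch.
    power_batch_sum = sum(c * v for c, v in zip(current_values, voltage_values))
    return round(power_batch_sum / payload['sample_number'], 2)


# Made-up batch of three samples for drive 3.
sample_payload = {
    'sample_number': 3,
    'current_drive3_batch': [{'value': 2.0}, {'value': 3.0}, {'value': 4.0}],
    'voltage_drive3_batch': [{'value': 10.0}, {'value': 10.0}, {'value': 20.0}],
}

# (2*10 + 3*10 + 4*20) / 3 = 130 / 3, rounded to two decimals
power_mean = compute_power_mean(sample_payload)
```

Here the result is 43.33. Because `zip` stops at the shorter list, the mean is only meaningful when both arrays have the same length and 'sample_number' matches that length.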

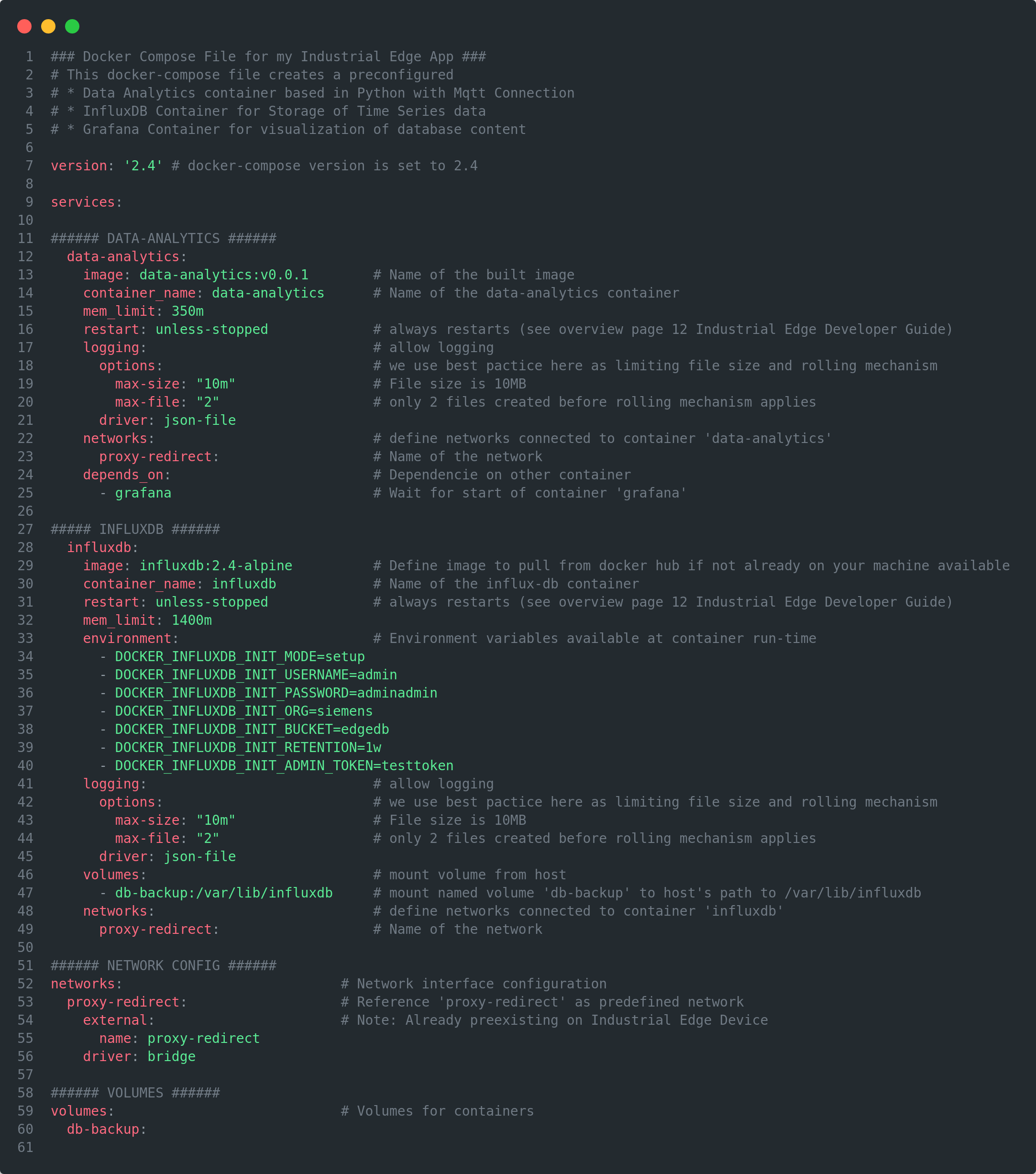

Overview of the Docker-Compose file

The docker-compose.yml is used to build the containers.

The docker-compose.yml contains two service descriptions for 'data-analytics' and 'influxdb'.

- influxdb: The service description pulls an InfluxDB image from Docker Hub in the corresponding version. A volume is mounted to this container to back up the database. Port 8086, which is used to interact with the database, is exposed. The service uses the bridged network 'proxy-redirect'.

- data-analytics: The service description contains a build step that builds the image from the Dockerfile. The service uses the bridged network 'proxy-redirect'.

HTTP and HTTPS proxy variables

As with the Node-RED container, please be aware of the variables for the HTTP and HTTPS proxy. You must set them in the .env file. The default value is empty, which means no proxy is used. If you work behind a proxy, as is the case in most company networks, configure it here.